LATEST UPDATE: blog.qryn.dev/chdb-clickhouse-as-a-library

ClickHouse, The Database

ClickHouse is our database of choice and the baseline we love to use for all of our products at qxip/metrico including our observability tools qryn and hepic.

We 💛 ClickHouse - Alexey Milovidov and his Team built a fantastic community around the project, later joined by VC partners to form ClickHouse Inc.

ClickHouse Life

For most of us ClickHouse is deployed as a standard database service.

Having an always-on server or cluster is very much required by most deployments and integrations for of this amazing technology coupling high-speed storage and processing. This means predictable costs and performance for those maximizing their own infrastructure.

There are also many great Clickhouse Hosting companies we work with delivering state-of-the-art managed service instances and great benchmark results with predictable costs at any scale - all backed by extremely skilled technical teams.

💜 Gigapipe (featuring a hosted qryn:cloud integration)

💙 Altinity (supporting the community and many of our customers)

💚 DoubleCloud (very kind sponsor of our public demo resources)

Life is good. But...

Sometimes it would be nice to just run a query against our own cloud data without the need for a dedicated ClickHouse server in standby. This would have an infinite amount of both practical and educational purposes.

Do we let this one go, or...

Let's Serverless

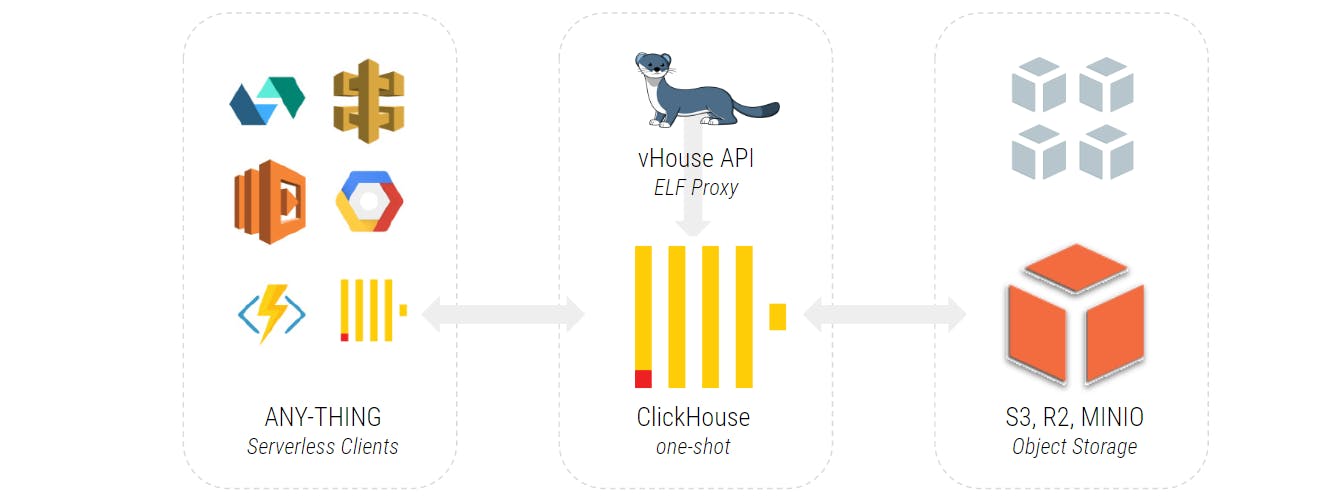

We'll keep the complexities for later. All we want is a stateless function we can call when needed with a fast API to query our remote S3/URL storage, in one-shot. We want to use the latest clickhouse codebase, so no major patches or changes are allowed - a good prototype should be easy to port and recreate in other languages. K.I.S.S. We're going back to the roots of ClickHouse.

Hello, ClickHouse-Local

The

clickhouse-localprogram performs fast processing on local files, without having to deploy a ClickHouse server.clickhouse-localsupports most features, formats and table engines but has no data or storage

Great! clickhouse-local is a stripped down build of clickhouse itself and can be greatly customized and extended (or shrunk) to meet specific feature profiles. This is just the perfect building block for our prototype, but it needs a bit of love ...

The Good

👍 standalone clickhouse functionality

👍 support for remote storage (s3, urleng, etc)

👍 easy overlay API with any language (node, go, python)

👍 as fast as clickhouse and zero cpu waste

The Bad

👎 too many components redundant to the serverless role

👎 dynamic ELF won't work on lambdas and serverless (docker excluded)

👎 static ELF binaries bake super large (~200mb uncompressed)

👎 slow ELF startup time when compressed (~40mb w/ upx)

Slim Faster

In order to work our new binary, we'll have to strip things down a little and create a portable clickhouse binary without the server parts, maintaining the functionality required for working with remote data. ClickHouse Issue #29378 helped lots.

Less is More 🍈🍉🍋

Let's start by disabling optional features using available cmake build parameters $ cmake .. \ -DCMAKE_BUILD_TYPE=Release \ -DENABLE_CLICKHOUSE_ALL=OFF \ -DENABLE_CLICKHOUSE_LOCAL=ON \ -DENABLE_CLICKHOUSE_SELF_EXTRACTING=OFF \ -DENABLE_CLICKHOUSE_BENCHMARK=OFF \ -DENABLE_CLICKHOUSE_KEEPER=OFF \ -DENABLE_CLICKHOUSE_KEEPER_CONVERTER=OFF \ -DENABLE_CLICKHOUSE_INSTALL=OFF \ -DENABLE_CLICKHOUSE_TEST=OFF \ -DENABLE_CLICKHOUSE_COPIER=OFF \ -DENABLE_CLICKHOUSE_COMPRESSOR=OFF \ -DENABLE_CLICKHOUSE_STATIC_FILES_DISK_UPLOADER=OFF \ -DENABLE_CLICKHOUSE_ODBC_BRIDGE=OFF \ -DENABLE_AVX=ON \ -DENABLE_AVX2=OFF \ -DENABLE_LIBRARIES=OFF \ -DENABLE_PROTOBUF=OFF \ -DENABLE_SIMDJSON=ON \ -DENABLE_RAPIDJSON=ON \ -DENABLE_UTILS=OFF \ -DUSE_UNWIND=ON \ -DUSE_SENTRY=0 \ -DUSE_STATIC_LIBRARIES=ON \ -DUSE_AWS_S3=ON \ -DENABLE_TESTS=OFF \ -DENABLE_EMBEDDED_COMPILER=0 \ -DENABLE_RDKAFKA=0 \ -DENABLE_POCO_MONGODB=0 \ -DENABLE_POCO_REDIS=0 \ -DENABLE_ODBC=0 \ -DENABLE_MYSQL=0 \ -DENABLE_S3=1 \ -DENABLE_GRPC=0 \ -DENABLE_HDFS=0 \ -DENABLE_AZURE_BLOB_STORAGE=0 \ -DENABLE_CASSANDRA=0 \ -DENABLE_KRB5=0 \ -DENABLE_ODBC=0 \ -DENABLE_AMQPCPP=0 \ -DENABLE_LDAP=0 \ -DENABLE_CCACHE=0 \ -DENABLE_SSL=ON

This is just a reference. There are many further optimizations possible

AVX2must be disabled for our function to run on virtual/emulated CPUs

💀 PR_SET_NAME

A minor road blocker is the lack of support for PR_SET_NAME on AWS Lambdas.

Pending PR, let's patch our build to allow this function to fail unharmed $ sed -i '/Cannot set thread name/c\' /ClickHouse/src/Common/setThreadName.cpp

Now we're ready to compile (with Docker alpine in our case) and patiently wait

Alpine clang version 14.0.6

Target: x86_64-alpine-linux-musl

$ ./ClickHouse/build/programs/clickhouse

Use one of the following commands:

clickhouse local [args]

✨ Nice. Our portable clickhouse binary is ready to run almost anywhere!

Pinocchio Layer

In order for our custom binary to work in real life we'll need to lie to our clients and wrap everything with a thin API compatibility layer (a technique we mastered while developing qryn and fluxpipe) to spawn process executions and handle incoming queries.

If you're thinking "What? We just removed this from ClickHouse and now we're adding it back?" - that is precisely what we're doing with this ELF proxy hybrid

To avoid reading/decompressing the file each time we need it and to minimize startup time and disk reads, we will also load the compressed binary in anonymous memory (memfd) and execute via a shared descriptor for the lifetime of the container or process execution. Just like a friendly virus! ⚠️

V is in the House

Let's live on the edge and write our wrapper HTTP API in ~30 lines of vlang and then compile it into a tiny static executable < 100kb

Same can be achieved with NodeJS, Python, etc... choose your own poison

vhouse.v

module main

import os

import memfd

import vweb

struct App {

vweb.Context

}

fn (mut app App) index() vweb.Result {

query := app.query['query'] or { '' }

if query == '' { return app.html('No Query') }

args := ['/proc/$os.getpid()/fd/3', 'local', '-q', '"' + query + '"']

result := os.execute(args.join(' '))

return app.text(result.output.str())

}

fn main() {

data := os.read_file('./clickhouse') or {

panic('error reading ELF')

return

}

res := memfd.vmemfd_new('clickhouse')

os.fd_write(res, data)

vweb.run(&App{}, 8123)

}

That's all we need, one GET handler. Embedding a POST play is optional.

😉 hidden bonus: no chunked responses or NDJSON handlers!

Let's compile and test our application using v

Compile a static binary

$ v -prod vhouse.v

Execute in the same folder as our custom clickhouse

$ ./vhouse

[vweb] Running app on http://localhost:8123/

Test with CURL

$ curl -G "http://localhost:8123" --data-urlencode "query=SELECT version()"

22.9.1.1

Great. But is it fast enough?

{

"elapsed": 0.000624628,

"rows_read": 0,

"bytes_read": 0

}

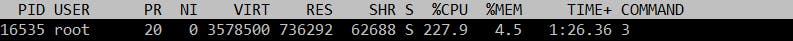

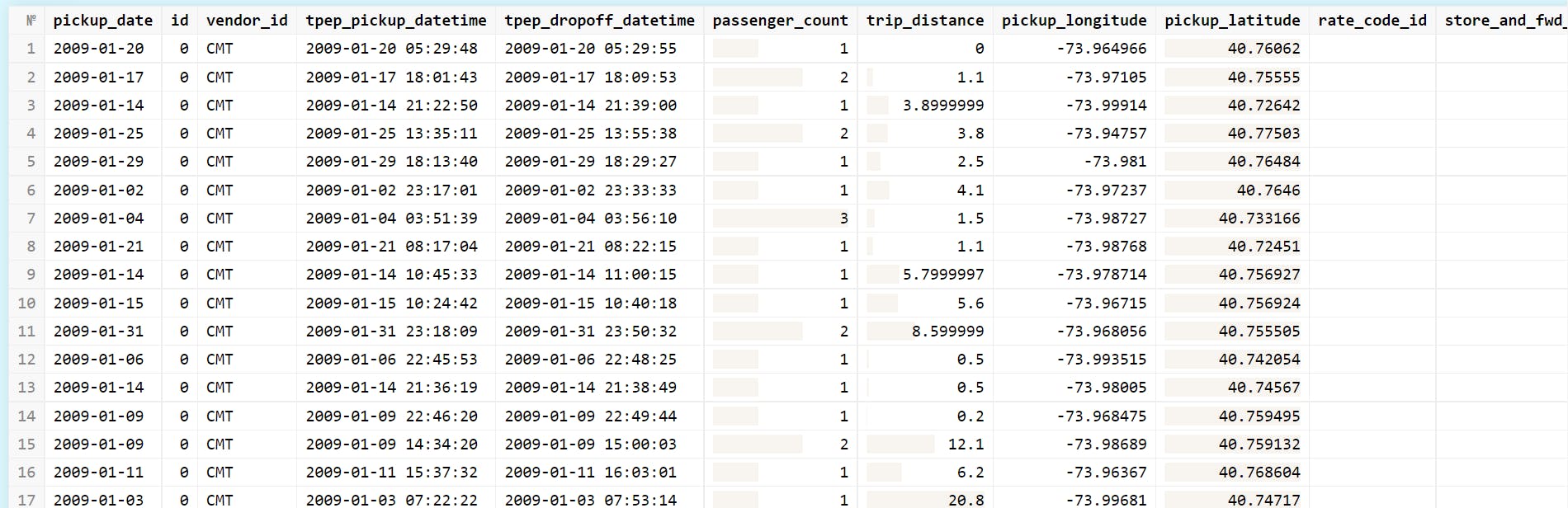

That's promising, now let's try with some real data off a public S3 Bucket!

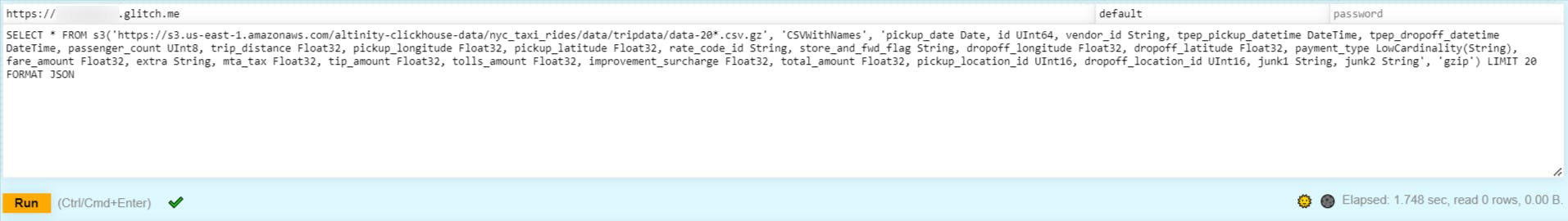

$ curl -G "http://localhost:8123" \

--data-urlencode "query=SELECT * FROM s3('https://s3.us-east-1.amazonaws.com/altinity-clickhouse-data/nyc_taxi_rides/data/tripdata/data-20*.csv.gz', 'CSVWithNames', 'pickup_date Date, id UInt64, vendor_id String, tpep_pickup_datetime DateTime, tpep_dropoff_datetime DateTime, passenger_count UInt8, trip_distance Float32, pickup_longitude Float32, pickup_latitude Float32, rate_code_id String, store_and_fwd_flag String, dropoff_longitude Float32, dropoff_latitude Float32, payment_type LowCardinality(String), fare_amount Float32, extra String, mta_tax Float32, tip_amount Float32, tolls_amount Float32, improvement_surcharge Float32, total_amount Float32, pickup_location_id UInt16, dropoff_location_id UInt16, junk1 String, junk2 String', 'gzip') LIMIT 20 FORMAT JSON"

✨ There we go, now that's something!

{

"elapsed": 1.195765097,

"rows_read": 0,

"bytes_read": 0

}

Your request mileage may vary - it should take about 1-2s to complete the average S3 query, depending on the compute/network factor. The row and bytes counters will be 0 until we add a few HTTP headers later on.

Moment of Truth

✨ glitch app

Let's run our newborn function on a free glitch instance and compare

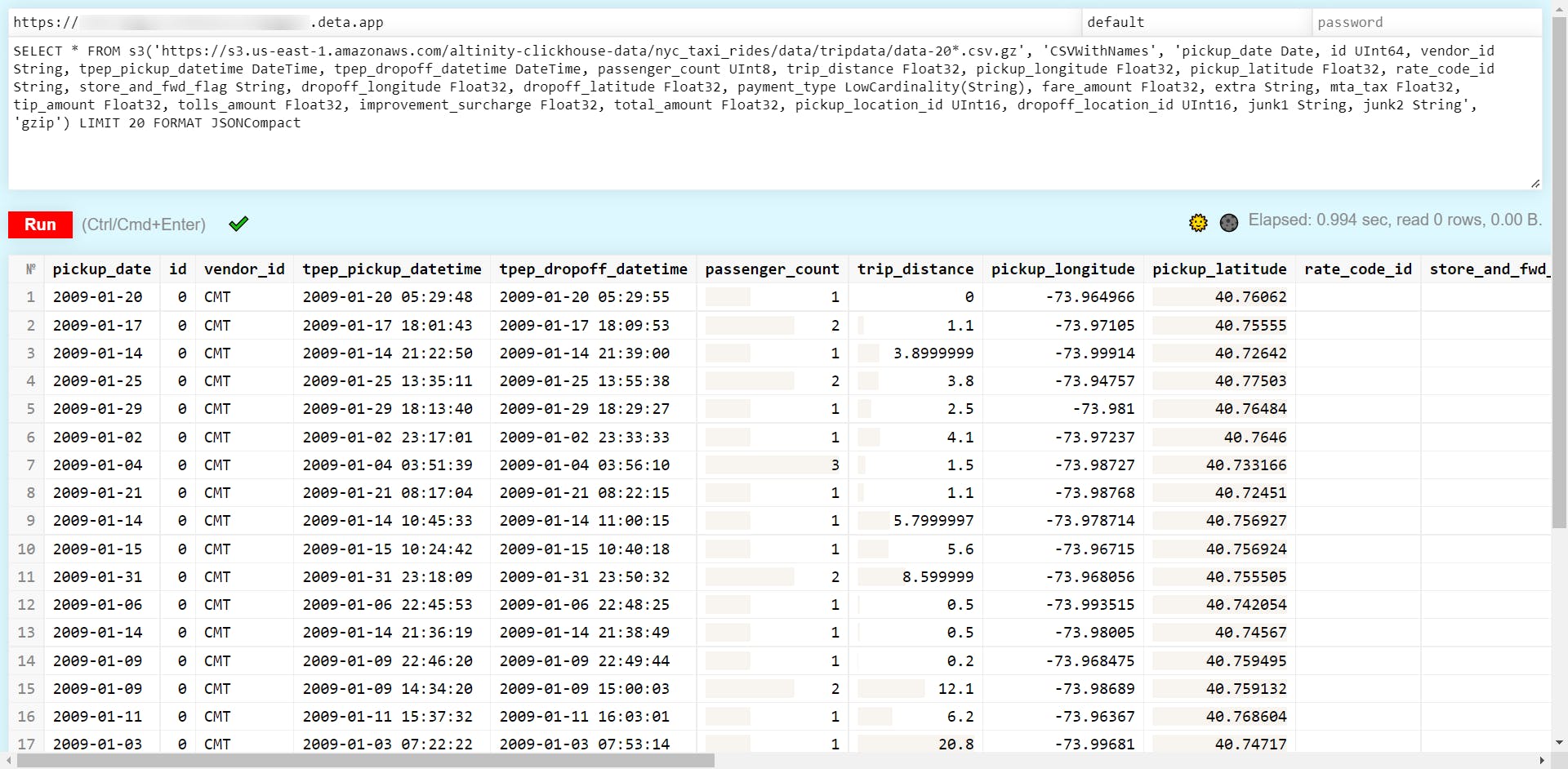

✨ deta micro

Let's deploy on deta space and push this even further!

That's just as fast as other "serverless" commercial options - and 100% free!

Not bad for our poor man's wrapper running on free resources!

💀 Lazy Mode = ON

Try it out right now using docker. The image includes the play app, too! docker run -d --name vhouse -p 8123:8080 --rm qxip/vhouse:22.9.1.1

💸 No S3? No Problem!

Try querying using our free URLENG service or Cloudflare R2 endpoints sql SELECT count() from url('https://urleng.com/loadtest', JSONEachRow)

Live Demo

This is not an image. It's a real Clickhouse serverless shell.

Conclusion

Did that just work? Yes, with a bundled HTTP ClickHouse client, too 🖖

You now have a hybrid serverless clickhouse function you can have fun chaining into your logic for fun and profit 🚀 We have a fleet of these babies running on glitch, aws lambda and deta space just to mention a few low-powered resources you can leverage in your experiments.

At qxip/metrico we use this to power our demos without requiring a backend.

This is by no mean trying to challenge ClickHouse Cloud or their products. It's just a fun prototype for those interested in building hybrid API wrappers the wrong way. Modifying ClickHouse local to expose a pico server function would also be possible, but outside the scope of this clickhacking experiment.

What's Next?

There's a lot more coming!

We're working on more pieces for this puzzle, such as Lambda templates, FLUX piping, S3 and URLENG + Deta storage bundles to provide truly personal, free forever serverless ClickHouse instances for anyone learning the art and for those building new cool ideas with it.

Enjoy and Share your Comments! 👋

Did this get you excited? Join our team, we're hiring!

Working with ClickHouse? Try our polyglot observability stack